Table of contents

This is the fourth post in the series about effortless performance improvements in C++. Check the first post to get the full story!

Last

time

we added a call to std::vector::reserve in tokenize to preallocate

the memory we needed, and got 15% performance improvements. After

these changes we observed that the tokenize function was still a top

hotspot, mostly due to calls to std::string(const std::string&) and

std::string::substr(). How can we improve that?

What’s an std::string anyway

In short, std::string is a fancy std::vector<char>, with a similar

reallocation policy. Regarding allocations, std::string has an extra

feature in the form of the Short String Optimization (SSO) which

allows not to use dynamic allocations for relatively short strings

(around 20 characters or less).

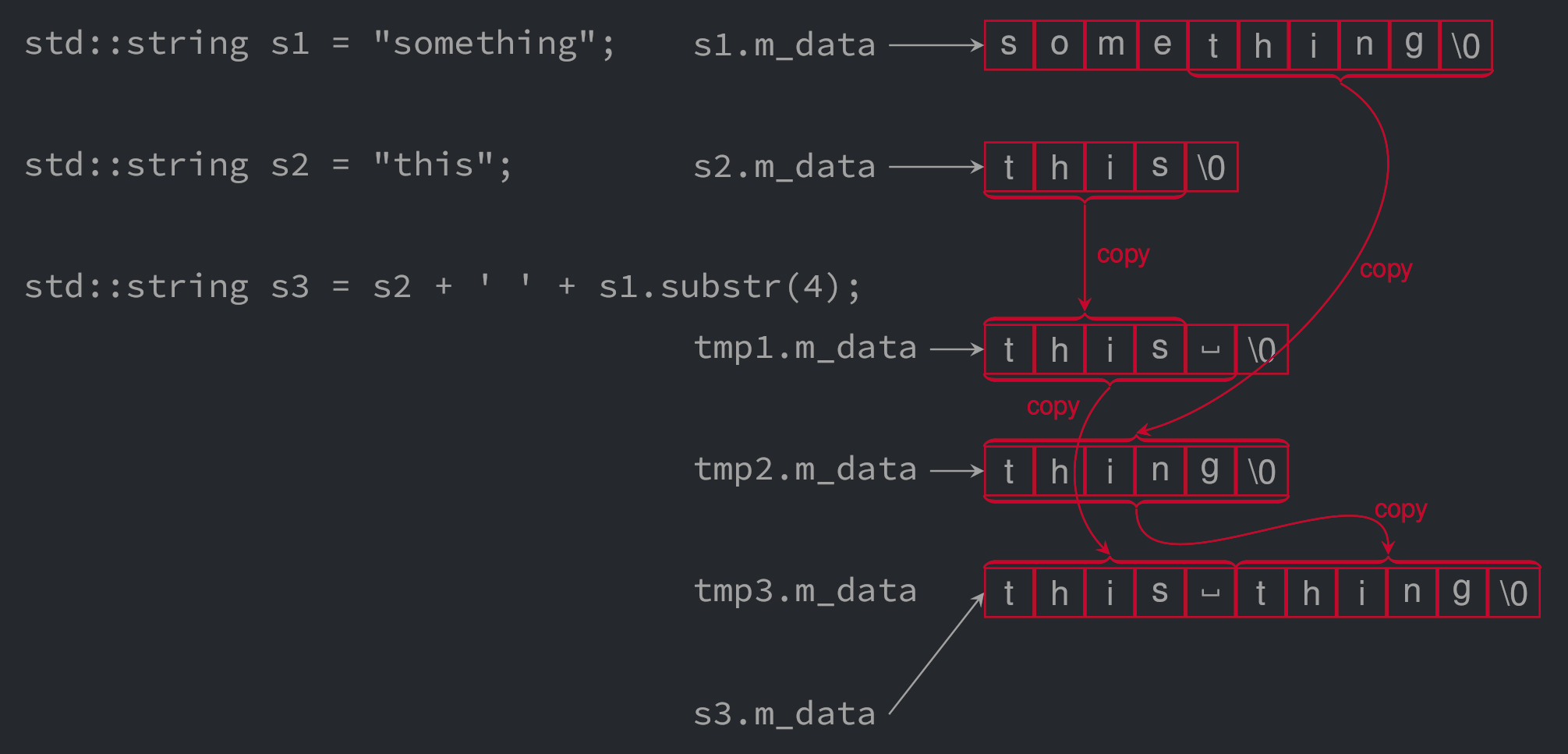

It is very easy to produce many, many, maaaaany, allocations when operating on strings. Let’s unroll a small example to better understand what is going on (ignoring SSO for didactic purposes). Consider the following code:

std::string s1 = "something";

std::string s2 = "this";

std::string s3 = s2 + ' ' + s1.substr(4); // "this thing"

Only three statements, let’s see what is going on behind the scene. If

m_data is the pointer to the buffer inside std::string, we can

track the allocations and copies with the following drawing.

Let’s begin with the initialization of s1. In order to put the

C-string “something” into s1 there is an allocation for s1’s

internal buffer, then the C-string is copied into this buffer.

The same goes for s2: one allocation for the buffer, then one copy

of the C-string (again, without considering SSO).

Things become a bit more complex for s3. First, a temporary string

tmp1 is created to store the concatenation of s2 and a space. The

internal buffer of the temporary string requires an allocation, then

s2 is copied into the buffer, and finally the space and the null

character are added at the end of the buffer.

Second step, the call to s1.substr(4) creates another temporary

string tmp2. Again, an allocation is done for its internal buffer,

then the sub-string begining with the fifth character of s1 is

copied into tmp2.

Last step before the assigment, tmp1 and tmp2 are concatenated. A

new temporary string tmp3 is created to receive the result, with an

allocation for its internal buffer. Then tmp1 is copied in tmp3,

followed by tmp2.

Finally, the internals of tmp3 are moved into s3.

All in all, behind these three statements, we had five dynamic

allocations, and six calls to strcpy.

Avoiding string allocations and copies

Just like with std::vector, an easy solution to save on allocations

is to call std::string::reserve() and allocate once and for all

before filling the string. It won’t help for temporary strings

though. The other key is to use std::string::operator+=() to

concatenate inplace. When these rules are applied to the previous

example the code becomes:

std::string s1 = "something";

std::string s2 = "this";

std::string s3;

s3.reserve(11); // Large enough to contain the final string.

s3 += s2; // Append inplace, no allocation.

s3 += ' '; // Append inplace, no allocation.

s3 += s1.substr(4); // Append inplace, no allocation.

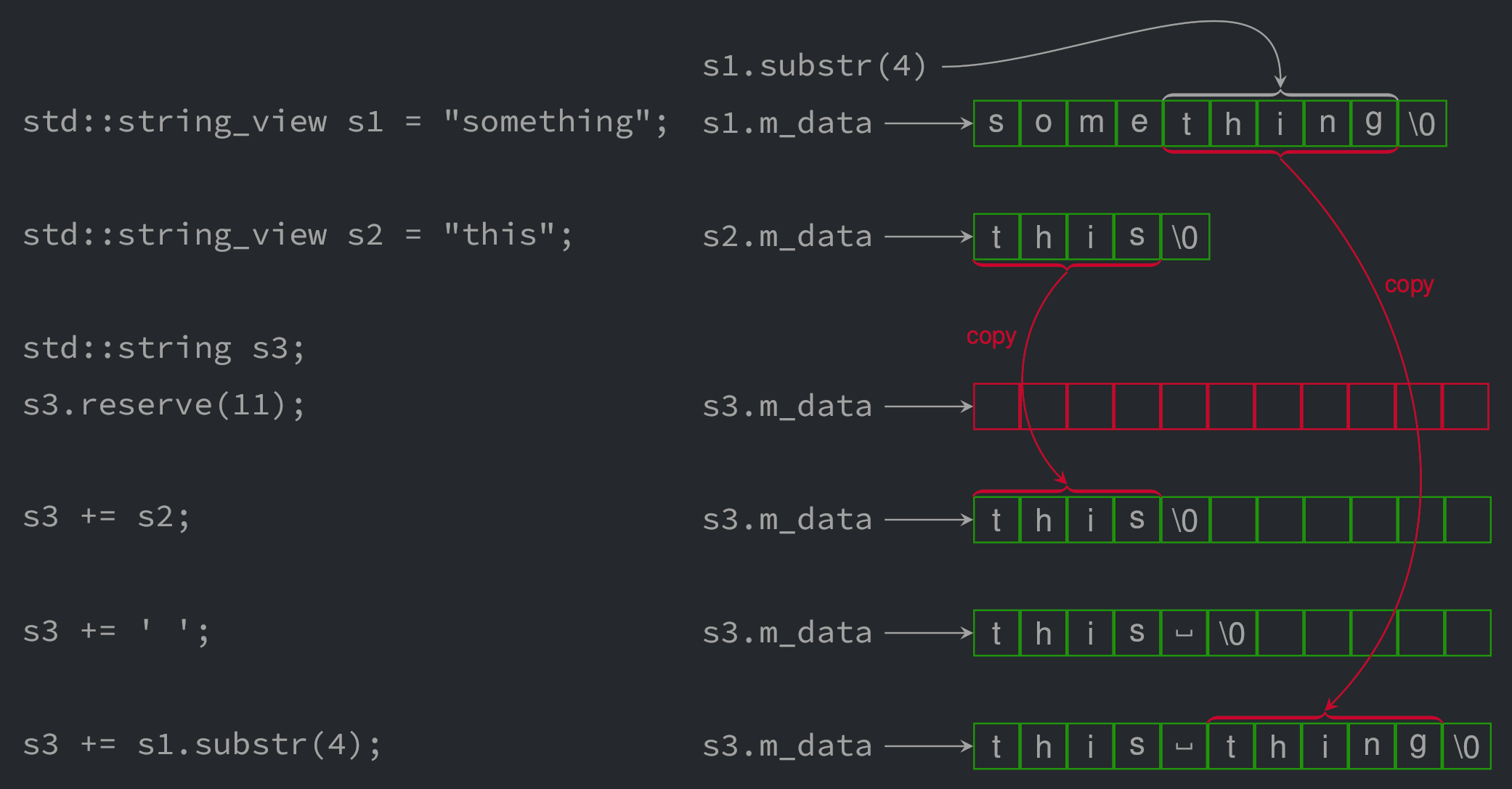

Additionally, our program can benefit from std::string_view,

provided by the standard library since C++17 (if you cannot use C++17,

know that there are many standalone implementations of this class

available on the web). An instance of std::string_view represents a

slice on a string-like object. It contains a const char* it does not

own, as well as a size. And that’s all. Note that if a

std::string_view is effectively a substring of another string, it

does not necessarily ends with the null character. Consequently, all

operations on its data() must be done within the limits of its

size().

The interface of std::string_view mimics a subset of the interface

of std::string. In the program we are studying today we are

interested with find() and substr().

Let’s see how std::string_view can help in our toy example with the

following drawing:

First of all, by declaring s1 and s2 as std::string_views, there

is no need to allocate a buffer and to copy the C-string; the

variables point directly to the C-strings. The only allocation here is

when we call s3.reserve(11), then all concatenations are done in

this buffer.

In the construction of the final string s3 we have a copy of s2’s

internal buffer into s3. Then a space is added at the end of

s3. Interestingly, calling s1.substr(4) does no allocation nor

copy s1’s data. This is just a temporary std::string_view

pointing somewhere in the same buffer and with a different

size. Finally, this temporary is copied at the end of s3.

We computed the same string than in the previous section, except that

we used a single dynamic allocation instead of five, and only two

calls to strcpy instead of six. Does it matter? Let’s check.

Implementing tokenize with std::string_view

Since tokenize does nothing else than extracting sub-parts from its

input string, it is the perfect candidate for a switch to

std::string_view. The change is simple:

-std::vector<std::string> tokenize(const std::string& s)

+std::vector<std::string_view> tokenize(const std::string& s)

{

- std::vector<std::string> result;

+ std::vector<std::string_view> result;

// Expect four fields in our input.

-10,2 +10,3

std::string::size_type colon = s.find(':');

+ const char* const c_str = s.c_str();

-13,3 +14,3

{

- result.push_back(s.substr(from, colon - from));

+ result.push_back(std::string_view(c_str + from, colon - from));

from = colon + 1;

-18,3 +19,3

- result.push_back(s.substr(from));

+ result.push_back(std::string_view(c_str + from, c_str + s.size()));

There are two important side effects to this change. First of all, the

signature has changed, impacting the call site and the way we manage

the tokens. In particular, concatenate_tokens has to reconstruct the

string from the std::string_views:

static std::string concatenate_tokens

-(const std::vector<std::string>& tokens)

+(const std::vector<std::string_view>& tokens)

{

std::string result;

- for (const std::string& token : tokens)

+ for (const std::string_view& token : tokens)

if (result.empty())

- result = token;

+ result = std::string(token.begin(), token.end());

else

- result += ":" + token;

+ result += ":" + std::string(token.begin(), token.end());

return result;

}

Secondly, the domain of application of tokenize has changed. By

returning instances of std::string_view pointing on the input

string, we implicitly expect the argument to live longer than the

returned objects. Previously the function returned independent

strings, so it did not matter from where the argument was coming. In

particular, code like this was previously valid and will now fail:

std::vector<std::string_view> v1 = tokenize("a:b:c:d");

// Passing "a:b:c:d" as the argument constructs a temporary

// std::string which is destroyed after the construction of v1.

// Consequently v1 contains pointers to destroyed data.

std::string get_token_string();

// A function that returns a string by value.

std::vector<std::string_view> v2 = tokenize(get_token_string());

// Here get_tokens_string() returns a temporary string, destroyed

// after the construction of v2. Thus v2 contains pointers to

// destroyed data.

Edit: as u/HabbitBaggins pointed in the Reddit thread, a solution to

avoid calling tokenize on a temporary string would be to delete the

corresponding overload:

std::vector<std::string_view> tokenize(std::string&& s) = delete;

End of edit.

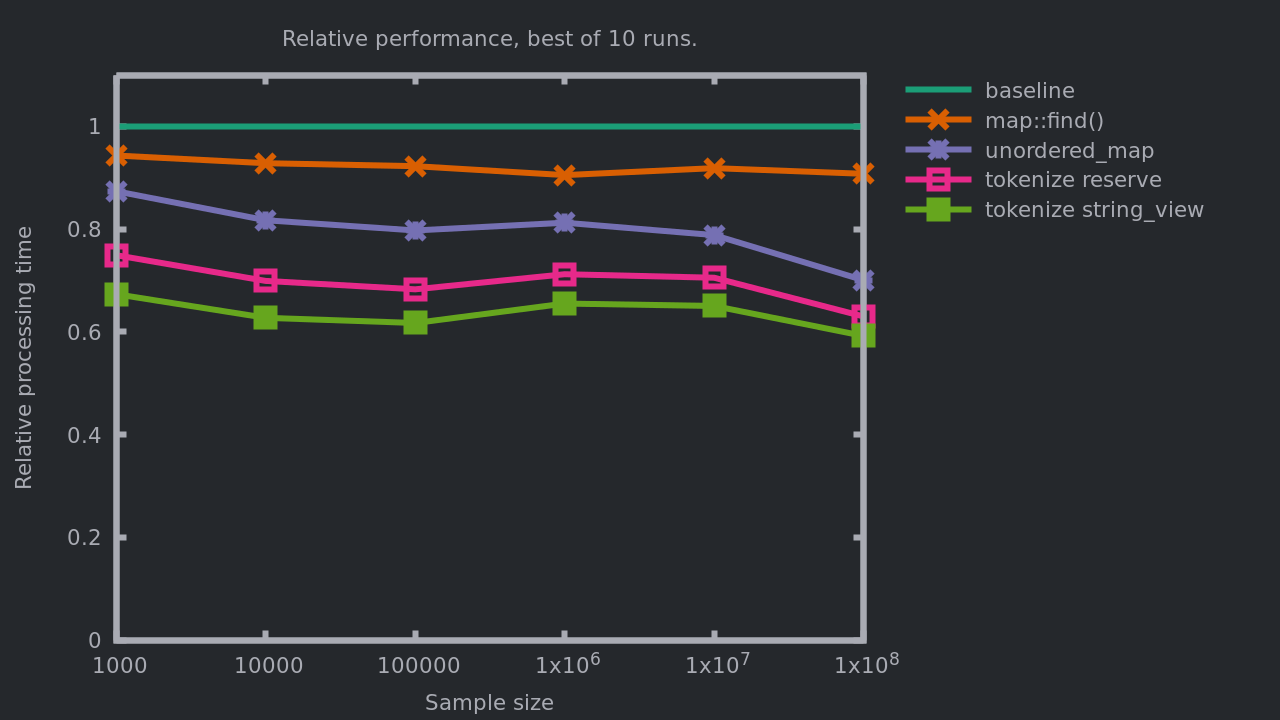

That being said, let’s look at the impact of this new implementation on our program.

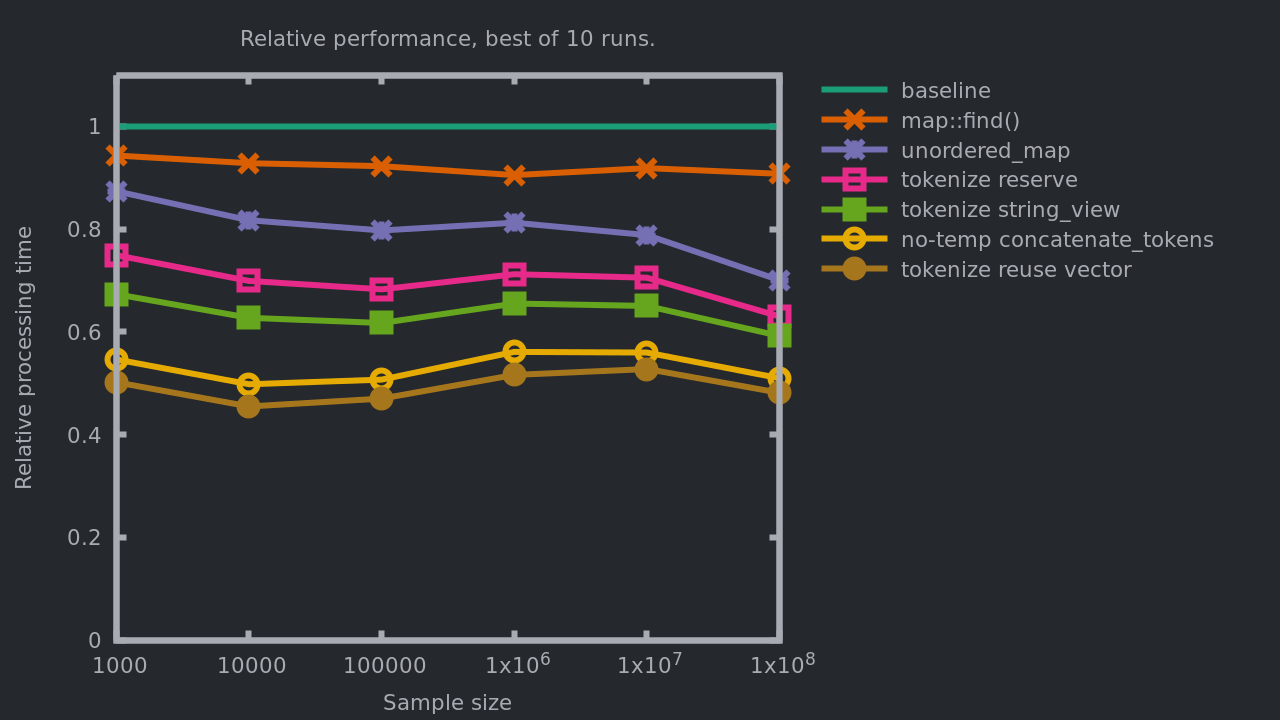

We saved up to 41% of the processing time relatively to the initial

implementation, and between 6 to 11% relatively to the previous

implementation! Let’s do a pass of perf:

- 19% in

std::unordered_map<std::string, std::string>::operator[]. - 14% in

std::unordered_map<std::string, std::string>::find. - 9% in

concatenate_tokens- Mostly in

std::string::operator+

- Mostly in

- 8% in

replace.- With

std::string::findas the top offender.

- With

- 7% in

std::getline. - 7% in

tokenize.

We managed to get a nice performance gain in tokenize but we

increased the cost of concatenate_tokens! Even though the global

performance is better, it is a bit disappointing. Since the function reached the top-three bottlenecks, let’s see if we can do something about it.

Even fewer allocations

The function concatenate_tokens builds an std::string from the

instances of std::string_view it has been passed; the std::string

is then returned. Contrary to tokenize we have to build the string,

but one can observe that the returned string is just concatenated at

the end of the result string built on the call site (in the service

function).

We previously saw that the allocations and copies have a huge impact

on the overall performance, so we are going to focus on that. What if

we skip the construction of the string in concatenate_tokens and

write directly in service’s result, i.e. what if we use an output

parameter?

static void concatenate_tokens

(std::string& result, // output parameter

const std::vector<std::string_view>& tokens)

{

result.append(tokens[0]);

for (std::size_t i = 1, n = tokens.size(); i != n; ++i)

{

// Append characters individually, no temporary std::string.

result += ':';

result.append(tokens[i]);

}

result += '\n';

}

std::string service(const std::string& in)

{

// Assume the output is not larger than the input.

result.reserve(in.size());

/* … */

concatenate_tokens(result, /* … */ );

}

The function concatenate_tokens now accepts a reference to an

std::string as its first argument. It begins by appending the first

token at the end of this string, then the remaining tokens are

appended too, each one prefixed by a colon. Finally, a line break is

inserted at the end. The main function reserves enough memory to

contain the whole input.

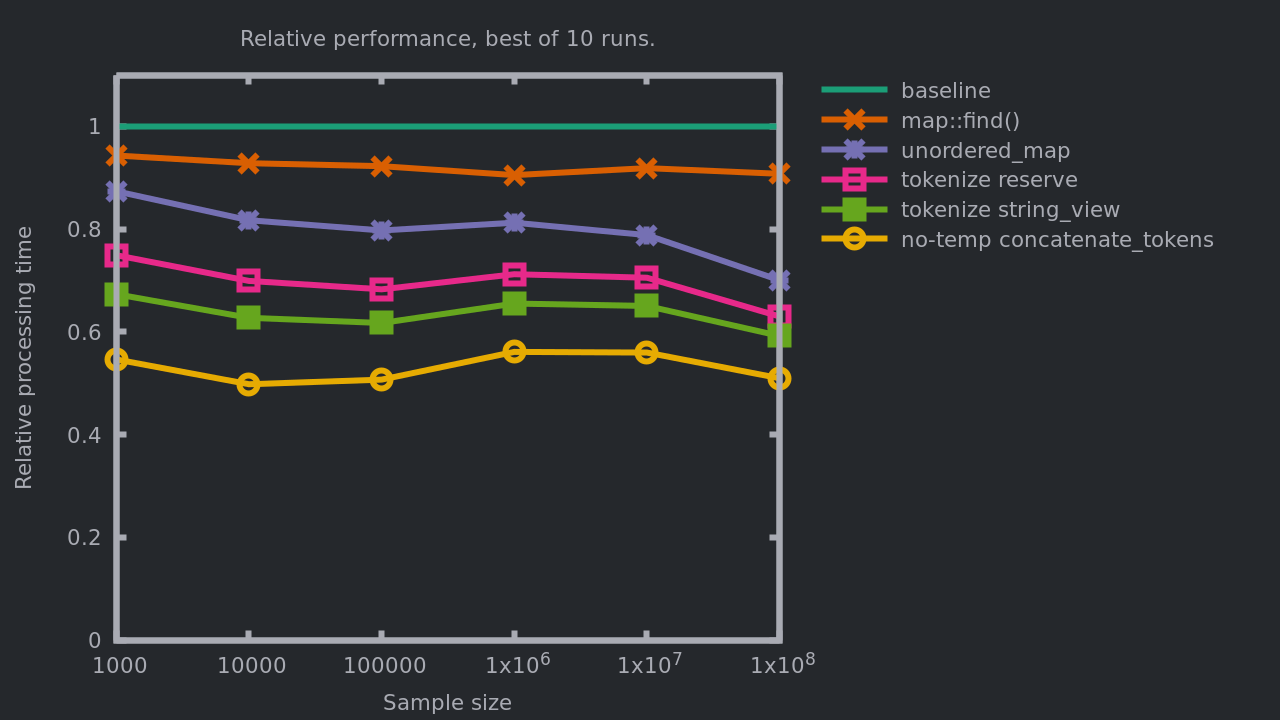

Let’s measure the effect on the processing time.

Avoiding temporary strings saved us 14 to 21% of the processing time, relatively to the previous implementation. This is also the first time that we are dividing the processing time by two relatively to the initial implementation, with a gain between 43 and 50%.

Output arguments are quite unpopular nowadays, especially with the

advances in Return Value Optimization (RVO), but here we see that they

can be quite beneficial. Hey, while we are at it, can you think of

another function where we could use an output argument? What about

tokenize?

void tokenize

(std::vector<std::string_view>& result, const std::string& s)

{

result.clear();

/* ... */

}

std::string service(const std::string& in)

{

/* ... */

std::vector<std::string_view> tokens;

// Expect at most four fields per line in our input.

tokens.reserve(4);

/* ... */

while (std::getline(iss, line))

{

tokenize(tokens, line);

/* ... */

}

/* ... */

}

Interestingly, the call to tokens.reserve(4) is probably not

required anymore. Since we are reusing the allocation for all

iterations, we could have let the default growth policy do its job for

a fixed cost of three allocations for the internals of tokens.

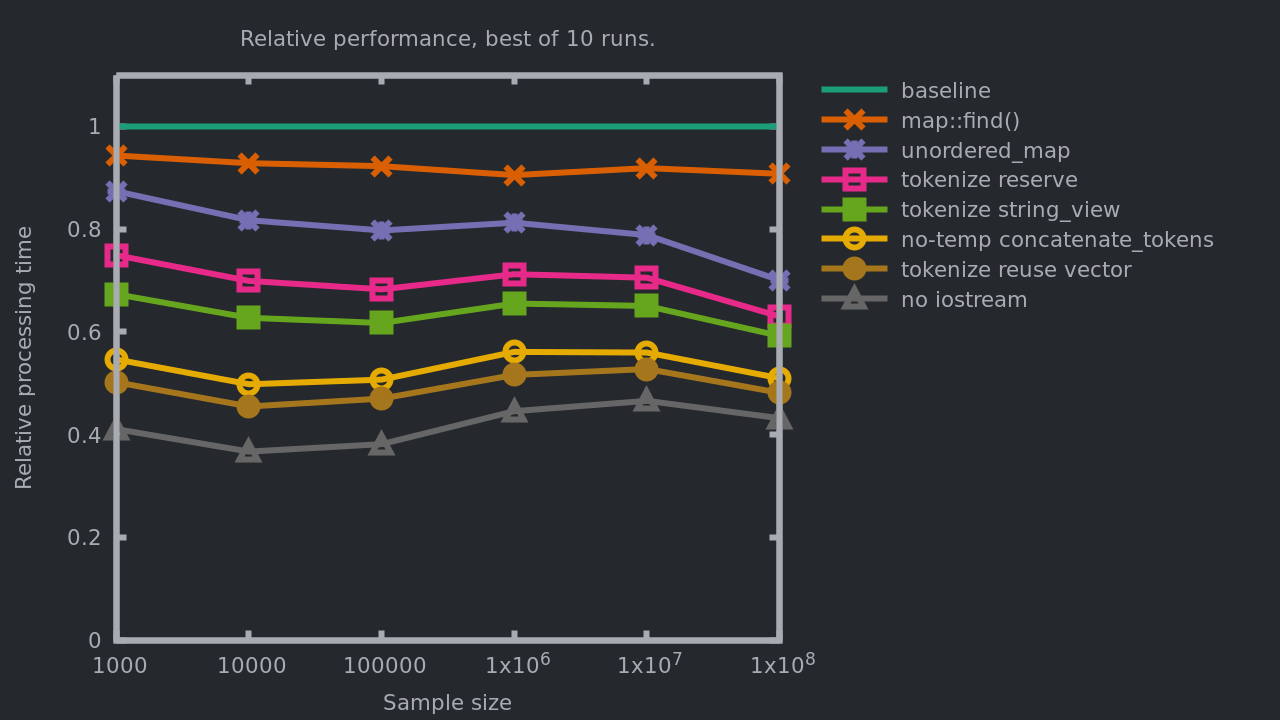

The measures:

This is another 5 to 9% gain relatively to the previous implementation, and 47 to 55% relatively to the initial implementation.

Let’s look at the output of perf:

- 23% in

std::unordered_map<std::string, std::string>::operator[]. - 16% in

std::unordered_map<std::string, std::string>::find. - 9% in

replace.- With

std::string::findas the top offender.

- With

- 8% in

std::getline. - 8% in

tokenize, - 4% in

concatenate_tokens

Great, the last changes moved concatenate_tokens way down. The next

step would be replace, but first I would like to discuss about

std::getline. In its current shape the program mixes

std::string_view and std::string, making it cumbersome in some

parts, especially in the interface of tokenize. We will see below

how we can avoid std::getline, use more std::string_view, and get

even more performance gain, and yet have cleaner code.

Avoiding IO streams

The input/output library from the STL (i.e. <iostream>, <fstream>,

<stream>, and friends) is well known for having poor

performance. Yet, it is the go-to solution to process formatted

streams in C++. In our example, the specs describe the input as “one

record per line”, so it seems natural to pick std::getline to

extract the records from the input. Also, the input being an

std::string we just have to wrap it in an std::istringstream and

through the magic of well designed interfaces everything converges

toward an easy to understand algorithm:

std::istringstream iss(input);

std::string line

while (std::getline(iss, line))

// process one line

Hey, we could even process any stream with that! std::ifstream,

std::cin, isn’t it cool?

Let’s take a step back and unroll what we did. We are given an

std::string containing all the rows we need to process. This string

is immutable, it cannot change during our function. Every record we

have to handle is already present in this string, already in memory,

and they are separated by a '\n'.

The solution we chose is to copy this string in a stringstream

(i.e. one allocation plus one large memcpy), then to scan this stream

for '\n's and to copy, again, segments of this stream into

another string (i.e. we have stream management, allocation and memcpy

for each record). In other words, we have many allocations, maybe one

for each record, and the equivalent of two copies of the whole input.

What we have described in the previous paragraph is an algorithm to

extract substrings almost verbatim from another string, and covering

the entirety of this string. I say “almost verbatim” because the only

change with the input string is that '\n's are turned into '\0's.

Following what we have seen so far you should have guessed by now that

std::string_view is a solution here. Consider this alternative to

std::getline:

static std::string_view next_line(const char* &p, const char* last)

{

const char* end = std::find(p, last, '\n');

std::string_view result(p, end - p);

if (end == last)

p = end;

else

p = end + 1;

return result;

}

The next_line function takes two arguments. The first one is a

pointer to the beginning of a C-string, passed by address. The second

one is a pointer past the end of the same C-string (note that we could

have passed an std::string_view& directly to avoid implicit

dependency between the arguments). The function scans the C-string for

the first line break then builds an std::string_view with the

segment separating it from the beginning of the string. Then the

pointer to the beginning of the C-string is updated to point to the

first character following the line break, or to the end of the

C-string if there is no character left. Finally, the

std::string_view is returned.

We can update our main function to use this function instead of

std::getline:

std::string service(const std::string& in)

{

const char* cursor = in.c_str();

const char* const in_end = cursor + in.size();

while (cursor != in_end)

{

const std::string_view line = next_line(cursor, in_end);

/* … */

}

}

As a nice side effect, this also simplifies tokenize():

void tokenize

-(std::vector<std::string_view>& result, const std::string& s)

+(std::vector<std::string_view>& result, const std::string_view& s)

{

result.clear();

- std::string::size_type from = 0;

- std::string::size_type colon = s.find(':');

- const char* const c_str = s.c_str();

+ std::string_view::size_type from = 0;

+ std::string_view::size_type colon = s.find(':');

- while (colon != std::string::npos)

+ while (colon != std::string_view::npos)

{

- result.push_back(std::string_view(c_str + from, colon - from));

+ result.push_back(s.substr(from, colon - from));

from = colon + 1;

colon = s.find(':', from);

}

- result.push_back(std::string_view(c_str + from, s.size() - from));

+ result.push_back(s.substr(from));

}

As usual, a graph of the impact on the performance:

By replacing the stream-based parsing with an ad-hoc scan for end of

lines, and also by working with std::string_view instead of

std::string, we got an additional 10 to 19% performance gain,

compared with the previous implementation. Combined, all changes so

far make between 53 and 63% improvement of the initial processing

time. We divided the cost of the algorithm by two.

Let’s look at the output of perf:

- 26% in

std::unordered_map<std::string, std::string>::operator[]. - 18% in

std::unordered_map<std::string, std::string>::find. - 10% in

replace.- With

std::string::findas the top offender.

- With

- 9% in

tokenize. - 4% in

concatenate_tokens.

Funny thing, between the end of the previous page and this paragraph,

the relative cost of std::unordered_map went from 32% to 44%. It is

no surprise since we reduced the overall processing time by improving

everything but this, but since it is a large portion of the processing

time, maybe we could… you know…

std::unordered_map of std::string_view

In order to handle the "set" and "value" records, we are still

building instances of std::string from the std::string_views

returned by tokenize so we can use them in our map. If we have

learned anything in this post, it is that we should avoid

std::string if we can, so what if we store the std::string_view

directly in our maps instead? As you can see below, it makes the code

a bit lighter.

std::string service(const std::string& in)

{

/* … */

using entry_map =

std::unordered_map<std::string_view, std::string_view>;

while (cursor != in_end)

{

/* … */

else if (tokens[0] == "set")

entries[tokens[1]] = tokens[2];

else if (tokens[0] == "value")

{

const entry_map::const_iterator it =

entries.find(tokens[1]);

if (it != entries.end())

tokens[1] = it->second;

else

{

static constexpr std::string_view empty

(nullptr, std::size_t(0));

tokens[1] = empty;

}

/* … */

}

/* … */

}

/* … */

}

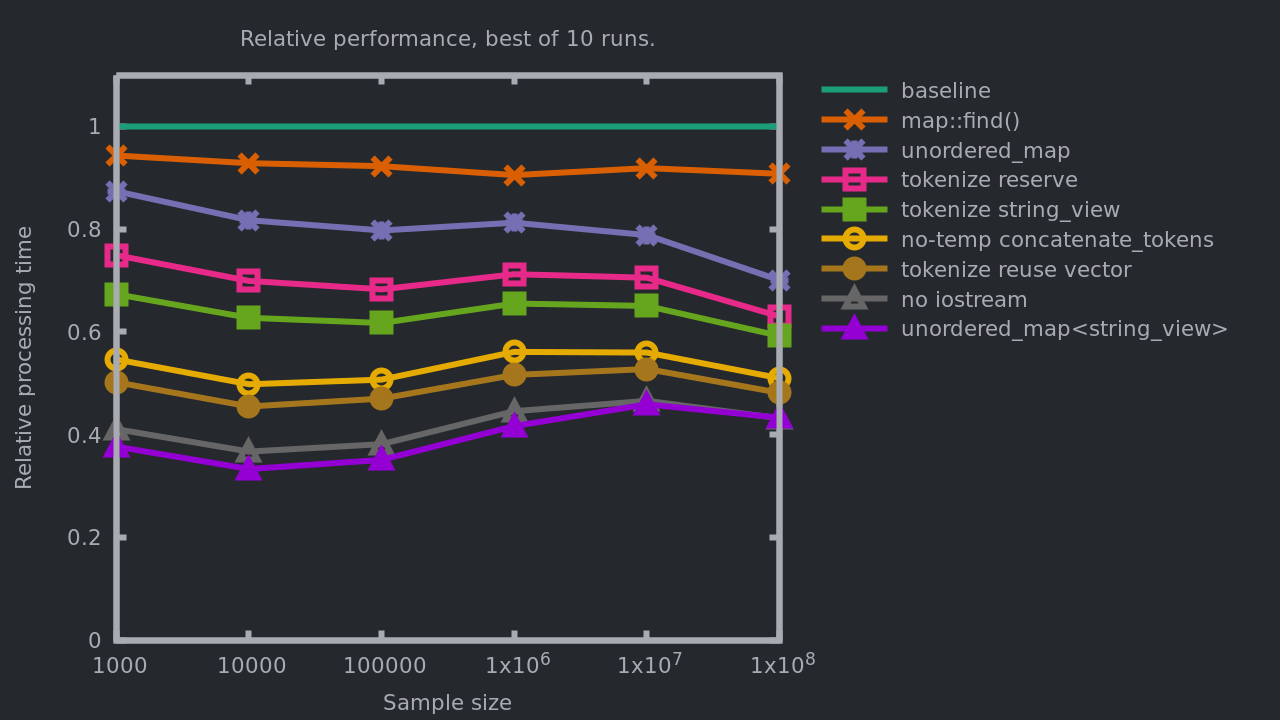

The graph of the impact on the performance:

Interestingly, the modification has no significant impact on the largest instances. Otherwise it’s a win, even if it is not a huge one (and the code is simpler!). We have up to 9% gain relatively to the previous implementation, and we are reaching between 54 and 67% with respect to the initial implementation.

Back to the perf bottlenecks:

- 24% in

std::unordered_map<std::string_view, std::string_view>::operator[]. - 23% in

std::unordered_map<std::string_view, std::string_view>::find. - 10% in

replace.- With

std::string::findas the top offender.

- With

- 9% in

tokenize. - 5% in

concatenate_tokens.

The relative cost of std::unordered_map is going up again! This

means that the the savings we have just made come from what was around

it, i.e. from the removal of the std::strings built especially for

the map. Maybe these results are hiding a worsening, like if a map of

std::string_view was less efficient than a map of std::string?

Since the share of std::unordered_map::operator[] is actually a bit

down and since the call to find precedently required a temporary

std::string that we have just removed, I doubt there is any

worsening here; but you may want to measure to confirm.

This post required more effort than we had to do in the previous

ones. I consider it to be an example where taking the simple path and

expecting optimizations to happen later on the project is actually

quite expensive: It would have been cheaper and actually simpler to

use std::string_view from the beginning.

In the next and final

part we will

focus on the replace function, and also come back on these maps!